Thinking about dependencies

People tend to have lots of opinions about software dependencies. Here are mine.

Standing on the shoulders of giants

At a high level, virtually all code in existence relies on tools and libraries written by other people. Even projects that don’t use any library dependencies rely on tools written by other people:

- compilers, interpreters and runtimes

- build systems

- version control

This is good. Depending on other people is how humans work.

But it’s possible to go too far. Dependencies may have:

- bugs, especially in uncommon and untested cases,

- security vulnerabilities, or

- malicious code introduced into them somewhere along the way.

I think there’s a balance to strike here. My heart favors dependencies, but my head recognizes that a lot of the concerns around them have merit.

Dependency management over time

The oldest programming ecosystems, like those of C and C++, generally did not have any sort of dependency management tooling. Dependencies were typically managed “out of band”, perhaps through a set of custom scripts or build instructions. This is still quite common on Linux with system-wide package managers like apt, and on Windows with DirectX runtimes. However, this model comes at a steep cost: it is very hard to know exactly what code you are depending on. Version mismatches between libraries can lead to mysterious bugs; this model bakes in the assumption that software always gets better over time, but in the real world this is often not the case.

The other option is to vendor them into the repository: copy and paste a particular version of a dependency, effectively forking it and taking control over it. This means you know exactly what code is included; however, it makes it much harder to stay up to date with bugfixes and security patches from upstream developers.

There’s been endless debate about the relative merits of each approach, with Linux distributions taking firm stands, and plenty of flamewars over the decades[1]. It’s best to completely skip over it; neither is ideal, and we can do much better.

Requirements and constraints

The next generation of ecosystems, such as Python’s pip and Haskell’s Cabal, made dependency management a bit more explicit. They consisted of three things:

- a global, internet-hosted package repository, such as PyPI

- a package manager: a client that could automatically download packages from the repository

- a way for developers to declare what third-party packages they require from the repository, along with a range of versions for each package.

This was a big improvement from before: instead of relying on custom scripts or forked versions of code checked into the repository, pip and Cabal provided a uniform way to handle them across projects.

Unfortunately, these tools did not address the biggest benefit of vendoring: knowing the exact versions of dependencies in use. Requirements files usually specified ranges of versions, and what version ultimately got used depended on what was available in the package repository when the tool was run.

Later tools like Ruby’s Bundler made the result of resolution explicit, writing out the results to a lockfile. For the first time, developers could have confidence that their package manager would behave deterministically across computers.
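To make the difference concrete, here is a minimal sketch of range-based resolution with and without a lockfile. It is not how pip, Cabal or Bundler are implemented; the package name `examplelib`, the repository snapshots and the `resolve` and `install` helpers are all hypothetical, and versions are modeled as plain tuples.

```python
# Hypothetical repository snapshots: package name -> versions published so far.
REPO_MONDAY = {"examplelib": [(2, 30, 0), (2, 31, 0)]}
REPO_FRIDAY = {"examplelib": [(2, 30, 0), (2, 31, 0), (2, 32, 0)]}

# A requirement is a half-open range: lower <= version < upper.
REQUIREMENTS = {"examplelib": ((2, 30, 0), (3, 0, 0))}


def resolve(requirements, repo):
    """Pick the newest published version inside each declared range."""
    resolved = {}
    for name, (lower, upper) in requirements.items():
        candidates = [v for v in repo[name] if lower <= v < upper]
        if not candidates:
            raise LookupError(f"no version of {name} satisfies the range")
        resolved[name] = max(candidates)
    return resolved


def install(requirements, repo, lockfile=None):
    """Use the pinned versions when a lockfile exists, else resolve afresh."""
    if lockfile is not None:
        return dict(lockfile)
    return resolve(requirements, repo)


# Without a lockfile, the same requirements give different answers over time.
monday = install(REQUIREMENTS, REPO_MONDAY)
friday = install(REQUIREMENTS, REPO_FRIDAY)

# With the lockfile written on Monday, Friday's install matches Monday's.
friday_locked = install(REQUIREMENTS, REPO_FRIDAY, lockfile=monday)
```

The only thing that changed between the two unlocked installs is what the repository happened to contain, which is exactly the nondeterminism a lockfile removes.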

Diamond dependencies and npm

One frequently occurring issue these tools did not address is diamond dependencies. Let’s say that your code depends on two libraries, A and B. If both A and B depend on different versions of a library C, pip and Cabal would not be able to handle this situation; they would simply blow up.
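The failure mode can be sketched with a toy resolver that, like the tools described here, allows only one version of each package in the final set. This is an illustration under simplifying assumptions (exact version pins, a graph keyed by package name only), not the algorithm pip or Cabal actually use; `resolve_flat` and `DiamondConflict` are hypothetical names.

```python
# Hypothetical declarations: package -> {dependency: exact version required}.
DEPENDS_ON = {
    "app": {"A": "1.0", "B": "1.0"},
    "A": {"C": "1.0"},
    "B": {"C": "2.0"},
    "C": {},
}


class DiamondConflict(Exception):
    """Two dependents need different versions of the same package."""


def resolve_flat(root, depends_on):
    """Walk the graph, allowing only one version per package name."""
    chosen = {}
    seen = set()
    stack = [root]
    while stack:
        package = stack.pop()
        if package in seen:
            continue
        seen.add(package)
        for dep, version in depends_on[package].items():
            # setdefault records the first version seen and returns it afterwards.
            if chosen.setdefault(dep, version) != version:
                raise DiamondConflict(
                    f"{dep}: {chosen[dep]} already chosen, {package} needs {version}"
                )
            stack.append(dep)
    return chosen
```

Calling `resolve_flat("app", DEPENDS_ON)` raises `DiamondConflict`, because A and B disagree about C. Changing B to require C 1.0 makes the same call succeed.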

Now there is nothing about this that is inherent to dependencies; it is quite possible for a system to be built such that multiple versions of a dependency can be used at the same time, and it just so happened that Python and Haskell weren’t able to. But the existence of this problem had two effects:

- it added a natural constraint to the size of a project’s dependency graph: the more dependencies you used, the more likely you were to run into this problem

- developers feared it, because it could happen as the result of any update to any dependency: there’s nothing that destroys confidence in a system like spooky action at a distance.

The first major attempt to solve this problem was in the node.js package manager, npm. Through a combination of tooling and runtime support, npm could manage multiple versions of a dependency at the same time. Unshackled, the power of dependencies was truly unlocked. npm projects quickly started growing thousands of them[2].

The npm model had its own problems, though:

- It went too far in its quest to avoid diamond dependencies: even if A and B depended on the same version of C, npm would store two separate versions of it. This would happen transitively, causing a combinatorial explosion in the number of dependencies used by a project.

- One could pass around data structures between incompatible versions of C, relying on JavaScript’s dynamic typing to hope that it all worked out. Opinions on how well it works are mixed.

- There were also design flaws in how npm’s package repository was run, the most famous of which resulted in the left-pad incident. A single developer deleted their package from the node.js package repository, bringing many projects’ build systems grinding to a halt. This was really, really bad and should have never been possible.

Cargo

Rust’s package manager, Cargo, incorporates most of the learnings of earlier systems while choosing to do a few things its own way[3]. A quick survey of its design decisions:

Knowing the exact versions of dependencies in use. Cargo’s lockfiles record the exact versions of every third-party library in use, and go even further by recording hashes of their source code. These lockfiles should be checked into your repository. I disagree with Cargo’s recommendation[4] to not check in its lockfile for libraries: everyone should know exactly what third-party code is included, so everyone should check in lockfiles. (They should also keep those lockfiles up to date with a service like Dependabot.)

Combinatorial explosion of dependencies. Cargo pulls back on npm’s model a bit: it will unify dependencies up to semver compatibility:

- If library A depends on version 1.1 of C and B depends on 1.2, Cargo will use a single version of C that satisfies the requirements of both A and B.

- If A depends on version 1.1 of C and B depends on 2.0, Cargo will include two separate versions of C.

This reduces the combinatorial explosion of dependencies seen in systems like npm to a linear explosion. Much more reasonable.
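The unification rule can be sketched as grouping requests into semver-compatibility buckets and building one copy per bucket. This is a simplified model, not Cargo’s resolver: `compat_key` and `unify` are hypothetical helpers, each request is treated as a minimum version with caret-style compatibility, and the sketch picks the highest requested minimum rather than the newest version actually published.

```python
from collections import defaultdict


def compat_key(version):
    """Return the semver-compatibility bucket for a (major, minor, patch) tuple.

    1.1.0 and 1.2.0 share a bucket; 1.x and 2.x do not. Before 1.0, the
    leftmost non-zero component is treated as the breaking one.
    """
    major, minor, patch = version
    if major > 0:
        return (major,)
    if minor > 0:
        return (0, minor)
    return (0, 0, patch)


def unify(requests):
    """Map {dependent: minimum version of C} to the copies of C to build."""
    buckets = defaultdict(list)
    for version in requests.values():
        buckets[compat_key(version)].append(version)
    # One copy per bucket; the highest minimum satisfies everyone in that bucket.
    return sorted(max(versions) for versions in buckets.values())


same_major = unify({"A": (1, 1, 0), "B": (1, 2, 0)})   # one shared copy
split_major = unify({"A": (1, 1, 0), "B": (2, 0, 0)})  # two separate copies
```

Because copies are only duplicated across compatibility buckets, the number of copies of a package is bounded by the number of incompatible release lines in use, not by the number of dependents.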

Incompatible data structures. Rust treats different versions of C as completely different, even if the types in them have the same names and structures. The only way to pass around data from C 1.1 to C 2.0 is to do an explicit conversion. Rust’s static type system allows this detection to be done at compile-time.

This model works in conjunction with the dependency unification described above.

left-pad. The crates.io package repository guarantees that code that’s been uploaded to it is never deleted (though it may be yanked). This is a basic guarantee that every internet-hosted package repository should provide.

Control

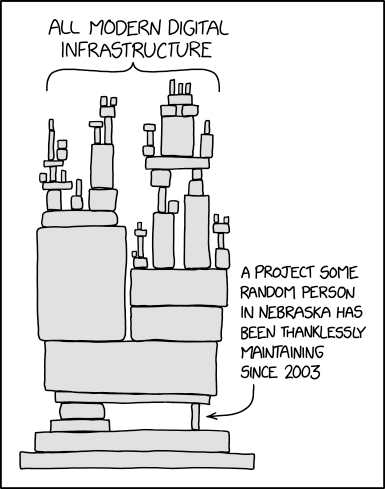

By addressing the technical issues that earlier systems had, Cargo foregrounds some very hard questions about human society. Depending on other people is scary. Across larger society we’ve built good models for being discerning about it, but we still need to refine them a bit for the online world.

Here are some arguments that look technical at the surface, but are really about trusting other people.

We don’t want our builds to ever hit the internet. This is a reasonable request, especially for entities that host their own source control. Cargo supports vendoring dependencies, storing them in the repository while still treating them as third-party code. Lockfile hashes ensure that third-party code isn’t accidentally changed.
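The idea behind hash checking can be sketched in a few lines. This is not Cargo’s lockfile or checksum format; the archive names, the `lock` mapping and the `verify` helper are hypothetical, and the vendored code is modeled as in-memory bytes rather than files on disk.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of some bytes."""
    return hashlib.sha256(data).hexdigest()


def verify(vendored, lock):
    """Return the names whose vendored bytes no longer match the locked hash."""
    return sorted(
        name
        for name, data in vendored.items()
        if sha256_hex(data) != lock.get(name)
    )


# Hypothetical vendored sources and the hashes recorded when they were vendored.
vendored = {"libfoo-1.2.0": b"original source", "libbar-0.3.1": b"other source"}
lock = {name: sha256_hex(data) for name, data in vendored.items()}

unchanged = verify(vendored, lock)

# Someone edits a vendored file in place without updating the lock.
vendored["libfoo-1.2.0"] = b"original source, locally tweaked"
changed = verify(vendored, lock)
```

An in-place edit shows up as a mismatch, so changes to third-party code have to be made deliberately instead of slipping in unnoticed.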

But in emergencies, we may have to make urgent bugfixes. Dependencies make that harder. I’ve had to deal with this myself; after all, in emergencies, being autonomous is important. The maintainers may not be as responsive as your schedule needs[5]. As an alternative, Cargo allows workspaces to patch dependencies.

It’s not just emergencies. Dependencies fail to work properly in edge cases, and our situation is an edge case. Rachel Kroll talks more about this. She’s right—lots of code works fine for most people, but has bugs when run in edge case environments like Facebook’s datacenters. If you’re one of the people it doesn’t work for, you have three options:

- Contribute improvements to the dependency, provided the maintainers are willing to accept your changes. We did this for many years with Mercurial, for example.

- Fork the dependency. You don’t have to work with the maintainers, but you do have to spend effort keeping up to date with bugfixes and security updates.

- Rewrite the code from scratch. You’ll have complete control over your code, at the cost of potentially ending up with a different set of bugs.

All of these options have tradeoffs. Depending on the facts, any of them, or something in between, may be the right thing to do. For example, you may be able to rewrite some code from scratch while copying over tests from a dependency. Do whatever makes the most sense for the situation.

We don’t know what dependencies are actually included in our code, we don’t have the time to review everything, and we can’t keep up with updates. Supply chain attacks are real and are becoming more common over time.

In general, having fewer dependencies certainly helps manage supply chain attacks. But rewriting code may also introduce its own bugs and vulnerabilities.

For large projects, it also makes sense to carve out a subset of your system as high-security, then have a higher bar for adding dependencies to it. This is a healthy way to channel concerns about dependencies into something that I think is quite constructive.

I think this concern gets at the biggest unsolved technical problem with dependencies, which is that they aren’t legible enough. I think solving this is worthwhile, and cargo-guppy is my attempt to do so. It provides a query interface over a Cargo dependency graph, telling you precisely what dependencies are included in a particular build target. This can then be used to carve a large codebase into several subsets, for example:

- a high-security subset as mentioned above, requiring the greatest degree of care

- the full set of binaries that form the production system, forming a superset of the high-security subset

- management and administration tools for the system

- developer tools, linters, and CI automation scripts.
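The kind of query involved can be sketched as plain graph reachability. This does not use guppy’s API; the package names, the `GRAPH` mapping and the `transitive_deps` helper are all hypothetical, and a real Cargo graph would also need to account for features, platforms and build or dev dependencies.

```python
# Hypothetical workspace graph: package -> direct dependencies.
GRAPH = {
    "crypto-core": ["bigint"],
    "server": ["crypto-core", "http", "logging"],
    "admin-cli": ["server", "arg-parser"],
    "lint-tool": ["arg-parser", "syntax-tree"],
    "bigint": [],
    "http": [],
    "logging": [],
    "arg-parser": [],
    "syntax-tree": [],
}


def transitive_deps(roots, graph):
    """Return every package reachable from the given roots, roots included."""
    seen = set()
    stack = list(roots)
    while stack:
        package = stack.pop()
        if package in seen:
            continue
        seen.add(package)
        stack.extend(graph[package])
    return seen


high_security = transitive_deps(["crypto-core"], GRAPH)
production = transitive_deps(["server"], GRAPH)
# Packages that only tooling pulls in, and so never ship to production.
only_in_tooling = transitive_deps(["admin-cli", "lint-tool"], GRAPH) - production
```

With subsets like these in hand, a review policy can be attached to each one, for example requiring extra sign-off for anything that lands in `high_security`.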

My coworker David Wong wrote cargo-dephell, which uses guppy along with several other technical and social signals and presents the information as a web page.

Who can you believe?

My understanding from listening to the more serious arguments against dependencies is that:

- people generally trust themselves most of all

- they trust the processes of programming languages a lot, and random third parties not so much (with possible exceptions for popular projects like parts of C++’s Boost).

There are a lot of reasonable positions to take here, and here’s how I think about some of them.

We only trust code that we wrote ourselves. Very few entities are in the position of:

- being able to write everything from scratch, including their compiler, build system and source control

- genuinely being able to trust internal talent over the collective wisdom gathered in an open source project

But some entities certainly are, and if you’re one of them, go for it.

OK, maybe not everything, but we only trust libraries written by ourselves. You’re still going to be relying on a compiler written by other people. Compilers are very complicated. They themselves depend on libraries written by other people, such as lexers, parsers, and code generation backends.

Lots of people use the same compilers and source control systems. We’re comfortable making an exception for them. This is a reasonable stance. Compilers and source control form clear abstraction boundaries. Reflections on Trusting Trust is part of computer security folklore, but I’m quite confident that the latest version of the Rust compiler doesn’t have a Trojan horse in it.

One way to be more careful is by using diverse double-compiling, or generally maintaining your own lineage of compiler binaries—I know of several entities that do this internally.

But compilers also come with a standard library, such as Rust’s std. This brings up a set of important governance arguments that are worth looking at.

The standard library should include everything, eliminating the need for third-party dependencies. Some languages like Python follow this “batteries included” approach, but in practice it has proven to be quite problematic.

Standard libraries are meant to be stable. Once they ship, most interfaces can never be changed, even if real-world usage exposes issues with them. Adding too many features to the standard library also stifles innovation, because why use a third-party dependency that’s only a little bit better when the standard library is right there? Experience has shown that Python’s batteries are leaking.

Put another way, adding something to the standard library is an irreversible type 1 decision. Put yet another way, a stable system is a dead system.

In contrast, Rust chooses to have a lean standard library, relying on Cargo to make it easy to pull in other bits of code. This lets developers experiment and come up with better APIs before stabilizing them. The most important ones ultimately make it into the standard library. I believe that this is the right overall direction.

Too much choice is bad! It makes it harder for us to make decisions about which library to pick. This is a pretty strong argument for a batteries-included approach. There is a real tradeoff between innovation and standardization, with no easy answers. My personal opinion is that:

- Python’s standard library is too big.

- Rust’s standard library is a bit too small, and some third-party code would be better off being in the standard library.

- It is better to err on the side of being too small than too big, because of the stability concerns above.

The advance Cargo made over earlier systems was to bring both options to a level playing field[6], so we can grapple with the complex social issues more directly.

We’re willing to trust “third-party” dependencies that language maintainers have taken over ownership of. This is great! Bringing more code under unified governance would be wonderful for popular dependencies to the extent that language maintainers have the capacity to do so. But they only have a fixed amount of time, and maintaining dependencies may not always be the best use of their skills. This problem is more acute for languages without large amounts of corporate funding: The Go language team can afford to maintain a large number of not-quite-standard libraries under /x, but the Rust nursery is less resourced.

Towards a trust model for third-party code

If you’re willing to trust the maintainers of a language, are you also willing to extend that trust to some other people? Assuming that you are, what sorts of facts are worth looking at and how should one evaluate them? There aren’t easy answers here, but some questions that may help:

How complex is the dependency? The harder the problem it solves, the more useful it is—the more likely that it captures the collective wisdom of an open source community.

Has the project gotten the technical fundamentals right? How much automation does it use? How well-tested is it? Does it make its quality processes easy to understand? Does it only use superpowers like Rust’s unsafe to the extent that it needs to?

How well-known is the project? How many contributors has the project had over time? Is the project used by other dependencies you trust? More popular code will have more eyes on it.

How well-run is the project? Do the maintainers respond to bug reports promptly? What sorts of code review processes do they have? Do they have a code of conduct governing social interactions? What about other projects by the same people?

Are the maintainers’ interests aligned with yours? Are they open to your contributions? How promptly do they accept bugfixes or new features? If you need to make substantial changes to a project that cause it to diverge from the maintainers’ vision, forking or rewriting it might be the right call.

For example, David Tolnay[7] is a well-known figure in the Rust community and the author of many widely-used Rust libraries. I’m happy to extend the same level of trust to him that I do to the Rust language team.

Conclusion

By addressing most of the technical issues involved in managing third-party dependencies, the Cargo package manager has brought a number of complex social problems to the fore. The implicit trust models present in earlier systems no longer work; evaluating third-party dependencies requires new models that combine technical and social signals.

Thanks to David Wong, Brandon Williams, Rex Hoffman, Inanna Malick, and kt for reviewing drafts of this post. Any issues with this post are solely my fault.

[1] Some of which I took part in myself back in the day. All those old IRC logs are lost in time, I hope.

[2] The npm community has a norm to split a library up into lots of reusable components. I think a few moderately-sized libraries are better than lots of small ones. I have many other thoughts about library size, coupling and API boundaries, but this margin is too narrow to contain them.

[3] Cargo isn’t perfect, however, and its dependency model still has a few technical problems. The biggest ones I’ve noticed are a lack of distributed caching leading to long build times for large dependency chains, and some more spooky action at a distance through feature unification.

[4] Added 2020-08-26: I think there are two justifiable positions here:

- Check in the lockfile, keep it up to date, and also update the version ranges specified in e.g. Cargo.toml files. This effectively adds a “meta-spec” on top of what Cargo.toml specifies, and each release captures what the latest versions of each dependency were at that time.

  If you care most about developers and CI building and testing the same code as much as possible, and are fine with applying gentle pressure on downstream developers to keep all their dependencies up-to-date, you may prefer this approach.

- Don’t check in the lockfile, specify the oldest required version instead of the newest one, and rely on the community to “fuzz” versioning because different developers will have different local versions of dependencies.

  If you care about the fact that external developers are going to use a range of versions against your code in practice, and wish to provide maximum flexibility, you may like this way of doing things more.

I personally prefer the first approach, but the other way is fine too. My only request is that if you take the second approach, please consider building tooling to test against the oldest versions you actually declared!

Thanks to Henry de Valence for engaging with me on this.

[5] Nor would it be reasonable to expect them to be, unless you have a support contract with them.

[6] Well, mostly. The standard library is still special in some ways.

[7] Disclosure: David and I currently work at the same place, and we occasionally collaborate.